Let's say we have some data samples in dimensions. Defining a distance metric in to capture the relationship between two points and is an important problem. If we simply take the space to be Euclidean, we can then directly compute the Euclidean distance as

Alternatively, we can also consider the Mahalanobis distance. The Mahalanobis distance is the distance between two points and given that the points follow a Gaussian distribution. it is defined as

Where is the covariance matrix of the Gaussian distribution.

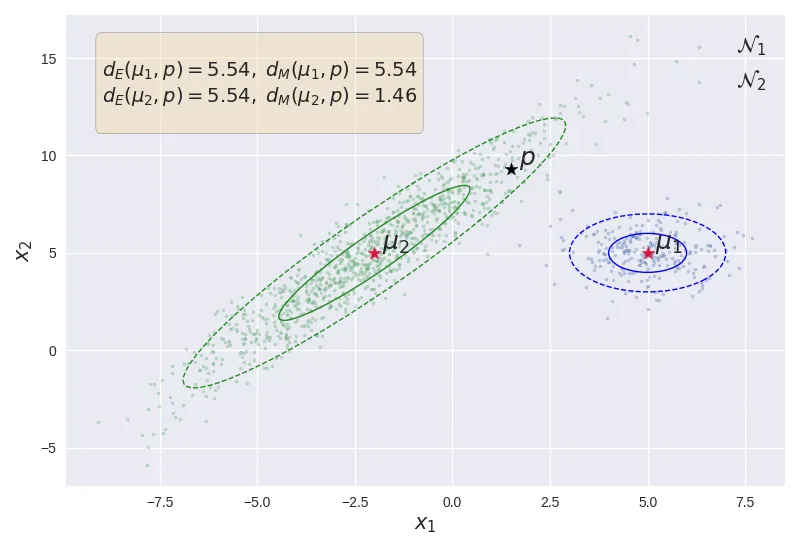

Illustration of Mahalanobis distance and Euclidean distance.

The above figure shows the effect of the underlying Gaussian distribution on the distance metric. Although the point is equidistant from both the points and , the Mahalanobis distance is less than owing to the fact that (shown in green) has a larger variance compared to (shown in blue). As shown, lies within the of compared to away from .

Also, note that . This indicates that the euclidean distance actually assumes that the underlying data generating distribution is a standard normal distribution (i.e. unit variance across all dimensions, with no correlation). Assuming that the underlying distribution is Gaussian, the Mahalanobis distance can be seen as a generalization of the Euclidean distance from the standard Gaussian to any given covariance matrix.

Extending to GMMs

How about a mixture of Gaussians? The Gaussian Mixture Model (GMM) is simple a weighted sum of independent Gaussian distributions. It can capture more complex distributions compared to simple Gaussian distribution without needing sophisticated computations.

Consider the GMM defined as

Where is the Gaussian distribution with parameters and is the coefficient of the Gaussian.

To extend the idea of Mahalanobis distance to GMMs, we first need a concrete and a general idea about the distance metric - one that generalizes to any given manifold or distribution and not just a Gaussian. Such a distance is called Riemannian distance.

The local distance between two points and is determined by the space that it lies in. The data always lies on a manifold. The manifold might be of the same dimension as the ambient space itself, or may even have a lower dimension. This manifold is determined by the data-generating distribution . The data is sampled from this data distribution which defines the manifold in which the data exists[1]. Therefore, the Riemannian distance is given by the path integral between along the manifold, weighted by the local Riemannian metric .

Where is the local Riemannian metric induced by the data generating distribution . You can think of it being a generalization of the covariance matrix to arbitrary distributions. In fact, the above matrix is called as a Fisher-Rao metric. The Fisher-Rao metric is a Riemannian metric on finite-dimensional statistical manifolds. The Riemannian metric is rather far more general.

Simplifying Riemannian Distance

The above Riemannian distance is generally intractable and the Fisher-Rao metric is also computationally expensive. There is, however, an alternate way proposed by Michael E. Tipping[2]. Instead of the above Fisher-Rao metric, we can construct an alternate Riemannian metric for GMMs as

Which is simply the average of the individual inverse covariance matrices. To circumvent the intractability of equation (4), Tipping offers a tractable approximation that yields a distance metric of the same form as (2).

Essentially we have moved the path integral inside the square-root where it is entirely captured by the local Riemannian metric . Moving from , the Riemannian metric becomes Where the path integral term computes the density along . If we assume that and are close to each other and that the geodesic distance can be approximated by a straight line, then

We can make some observations about the above result. First, for , the above equation yields the Mahalanobis distance as expected. The Riemannian metric only depends on the Gaussian components that have non-zero value along the path , so there are no spurious influences from other component densities. Lastly, just like Mahalanobis distance the above GMM-distance is also invariant to linear transformations of the variables.

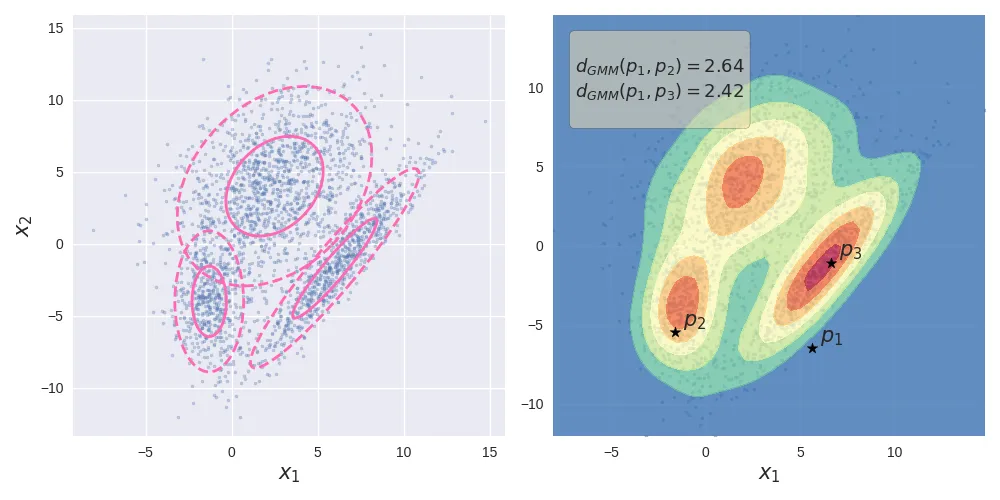

Gaussian Mixture Model. The left image shows the data generated by a mixture of 3 Gaussians. The image of the right shows the probability density along with the GMM-distances of 3 points.

import numpy as np

from scipy import special

def GMMDist(x1: np.array, x2:np.array,

mu: np.array, Sigma:np.array, lambdas:np.array) -> float:

"""

Computes the Riemannian distance for Gaussian Mixture Models.

Args:

x1(np.array): Input point

x2 (np.array): Input point

mu (np.array): List of mean vectors of the GMM

Sigma (np.array): List of Covariance matrices of the GMM

lambdas (np.array): Coefficients of the Gaussiam mixtures

Returns:

float: Riemannian distance between x1 and x2

for the given GMM model

"""

v = x2 - x1

K = len(Sigma) # Number of components in the mixture

S_inv = np.array([np.linalg.inv(Sigma[i]) for i in range(K)])

# Path Integral Calculation

path_int = np.zeros(K)

def _compute_k_path_integral(k:int):

a = v.T @ S_inv[k] @ v

u = x1 - mu[k]

b = v.T @ S_inv[k] @ u

g = u.T @ S_inv[k] @ u

# Normalization Constant

Z = -g + (b**2 / a)

const = np.sqrt(np.pi / (2* a))

path_int[k] = const * np.exp(0.5 * Z)

path_int[k] *= (special.erf((b+a)/np.sqrt(2 * a)) -

special.erf(b/np.sqrt(2 * a)))

# Compute the path integral over each component

for k in range(K):

_compute_k_path_integral(k)

# Reweight the inverse covariance matrices with the

# path integral and the mixture coefficient

w = lambdas * path_int

eps = np.finfo(float).eps

G = (S_inv*w[:, None, None]).sum(0) / (w.sum() + eps)

return np.sqrt(np.dot(np.dot(v.T, G), v))

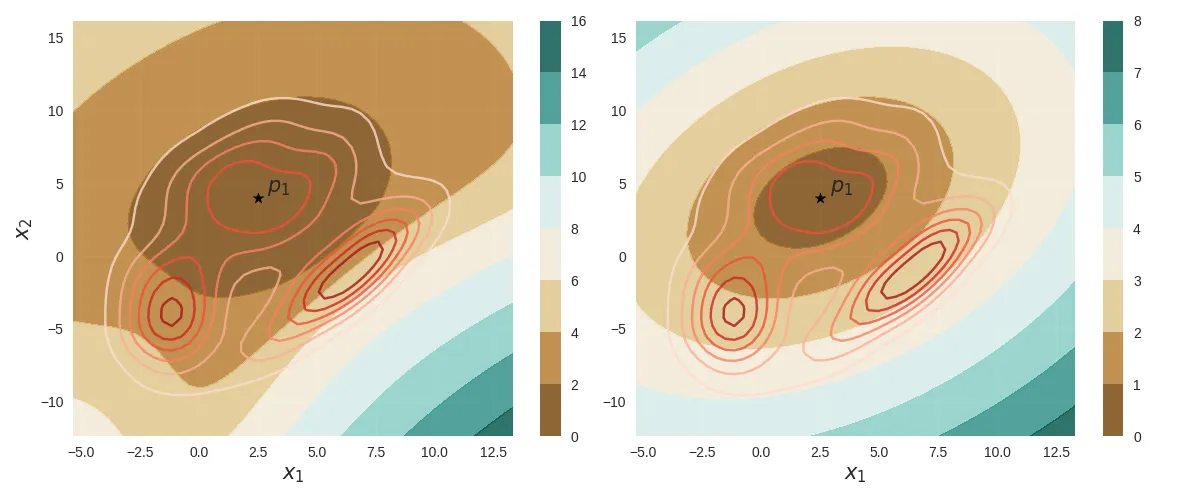

Finally, the following figure compares the contour plots of both the GMM Riemannian distance (left) and the the Mahalanobis distance (right) from the point with the same underlying probability density (shown as red contour lines).

Comparison between GMM Riemannian distance (left) and Mahalanobis distance (right) from the point for the same underlying densit.

The clustering problem is a direct application of the above distance metrics - more specifically the class of model-based clustering techniques. Given some data samples, we fit a model (inductive bias) - say a Gaussian or a GMM, and we can use the above distance metrics to group them into clusters. Or even detect outliers.

| [1] | As long we are dealing with data samples, it is almost always good to think of the data as samples from some data-generating distribution and that probability distribution lies on a manifold called statistical manifold. This idea has been quite fruitful in a variety of areas within ML. |

| [2] | Tipping, M. E. (1999). Deriving cluster analytic distance functions from Gaussian mixture models. Link. |