Lossless compression is a family of techniques for encoding data from a random source so that the original can be recovered exactly. It is sometimes called source coding or entropy coding in the literature. The term "entropy coding" stems from Shannon's source coding theorem, informally stated as follows.

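Source Coding Theorem: Given $N$ discrete i.i.d. random samples $X$, each with a fixed set of possible outcomes $\{a_1, ..., a_K\}$, they can be compressed without any loss in information into no fewer than $N H(X)$ bits as $N \to \infty$. Here the entropy $H(X)$ is given by $H(X) = \sum_{k=1}^K p(a_k) \log_2 \frac{1}{p(a_k)}$, where $\log_2 \frac{1}{p(a_k)}$ is the information content of each outcome.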

In other words, if $X$ is losslessly compressed to $Y$, then entropy is preserved, i.e. $H(X) = H(Y)$. The source coding theorem imposes a fundamental limit up to which a given piece of data or message can be compressed without any loss in information. In the literature, the compressed mapping of each possible outcome is called the codeword. The above limit specifies the smallest theoretical length of the codeword in bits. A common example of an entropy coding method is Huffman coding.
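To make the limit concrete, here is a minimal sketch that computes the entropy of a small source and the resulting lower bound on the compressed size. The function name `entropy_bits` and the four-outcome distribution are assumptions chosen for illustration, not values from the text.

```python
import math

def entropy_bits(probs):
    """Shannon entropy H = sum p * log2(1 / p), in bits per sample."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical source with four outcomes
probs = [0.5, 0.25, 0.125, 0.125]
n_samples = 1000

H = entropy_bits(probs)
print(f"entropy: {H} bits per sample")
print(f"lower bound for {n_samples} samples: {n_samples * H} bits")
```

A fixed-length code would spend 2 bits per sample on this source, so the gap between 2 and the entropy is what an entropy coder tries to recover.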

Huffman coding is a simple method where each outcome is mapped directly to a unique codeword. The crux of the method, which lets it close in on the information limit, is that it uses a variable length for each codeword depending on the probability $p(a_k)$ of its outcome. The more probable an outcome, the shorter its codeword, and vice versa. For a good explanation of Huffman coding, refer to Chris Olah's article.
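The variable-length idea can be sketched in a few lines. The code below is one standard way to build Huffman codeword lengths with a priority queue; the helper name `huffman_code_lengths` and the example distribution are assumptions made for illustration.

```python
import heapq
import math

def huffman_code_lengths(probs):
    """Return the Huffman codeword length (in bits) for each outcome."""
    # Heap entries: (probability, tie_breaker, outcome indices in this subtree)
    heap = [(p, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    counter = len(probs)
    while len(heap) > 1:
        p1, _, s1 = heapq.heappop(heap)
        p2, _, s2 = heapq.heappop(heap)
        # Merging two subtrees pushes every outcome in them one level deeper
        for i in s1 + s2:
            lengths[i] += 1
        heapq.heappush(heap, (p1 + p2, counter, s1 + s2))
        counter += 1
    return lengths

probs = [0.6, 0.2, 0.1, 0.1]  # hypothetical distribution
lengths = huffman_code_lengths(probs)
avg_len = sum(p * l for p, l in zip(probs, lengths))
entropy = -sum(p * math.log2(p) for p in probs)
print(lengths, avg_len, entropy)
```

The most probable outcome receives the shortest codeword, and the average length stays within one bit of the entropy, because every codeword must have a whole number of bits.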

In recent years, there has been much development in newer entropy coding techniques that perform better than Huffman coding [1]. In this post, we shall take a practical approach to study one recent entropy coding method - Asymmetric Numeral Systems (ANS).

Problem Setup

Problem : Let us say you are keeping track of an incoming stream of bits on a small piece of paper. Obviously there is no room on the piece of paper to write down all the bits. How do you then keep track of it? By keeping track we mean that at any given moment, your log must reflect all of the past information.

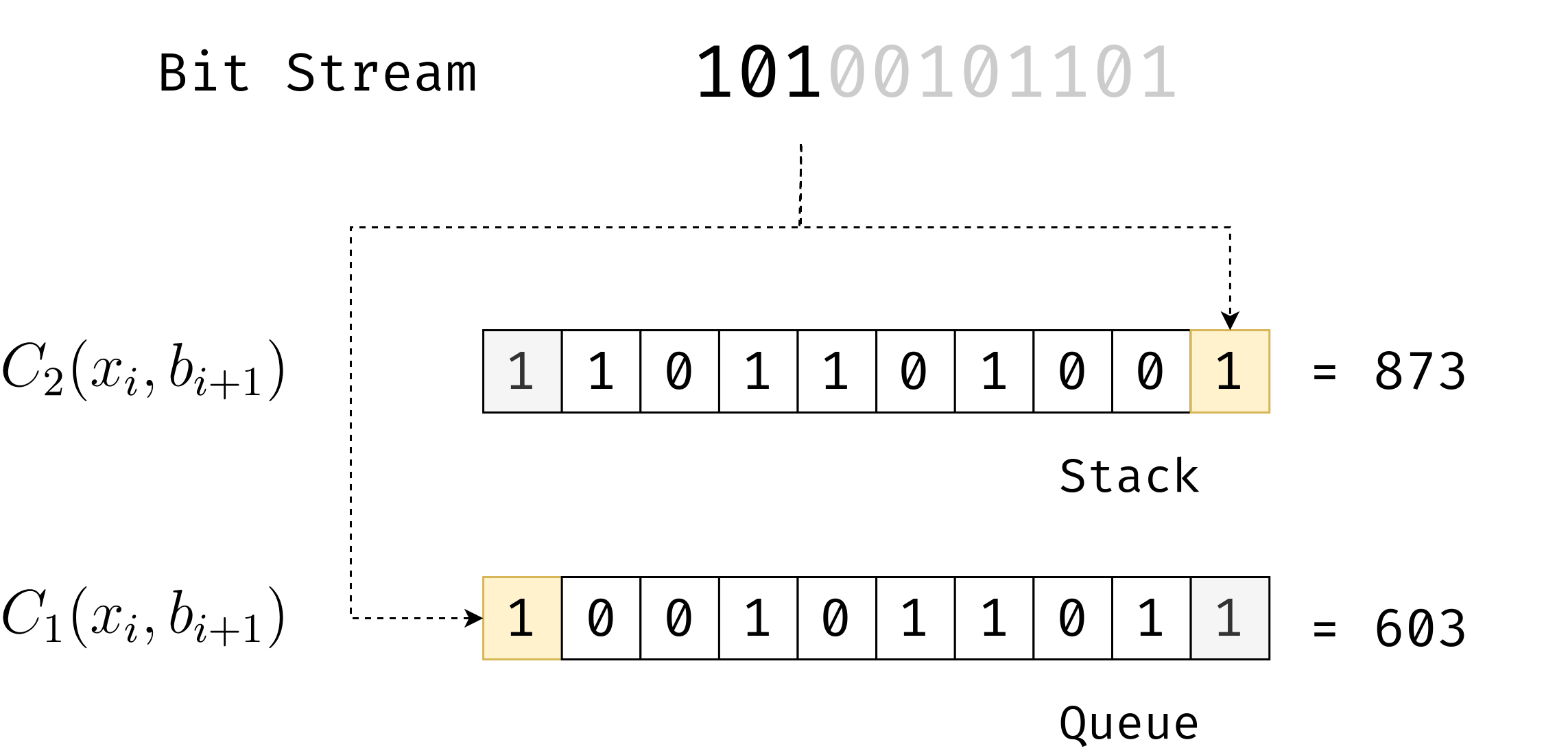

Assume that we start tracking the bit at time step $i$ (represented as $b_i$) with an initial symbol $x_i$. When the next bit $b_{i+1}$ comes in, we must update our symbol to $x_{i+1}$. One simple way to do this is to start with some initial value $x_0$ and, at each time step, update as

$$ x_{i+1} = x_i + 2^{i} b_{i+1} \tag{2} $$

This essentially results in the decimal representation of the bits received so far, read as a binary number. This method, apart from being lossless and fast (powers of 2 can be computed using bit-shifts), has an interesting property - the range of values that $x_i$ can take is altered at every time step. Note that in the above equation, the incoming bit is added at the most significant bit (MSB) of $x_i$. Therefore, each such update increases the range.

Alternatively, the incoming bit can be added at the least significant bit (LSB), such that the range is kept the same but the number alternates between odd and even (even if $b_{i+1}$ is 0 and odd if it is 1). This can be written as

$$ x_{i+1} = 2 x_i + b_{i+1} \tag{3} $$

In other words, $b_{i+1}$ determines whether $x_{i+1}$ will be even or odd. In entropy coding, equation (2) is used in the well-known Arithmetic Coding (AC) technique, while equation (3) is used in Asymmetric Numeral Systems (ANS). AC requires that the bits come in from LSB to MSB, while ANS requires them from MSB to LSB. This fundamental difference shows in the way they are implemented. By default, all streaming data comes from MSB to LSB - AC behaves like a queue (first-in-first-out) while ANS behaves like a stack (last-in-first-out). Note that both methods are equally fast - both $2^i$ and $2 x_i$ can be computed through bit-shift operations.
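The queue versus stack behaviour can be seen directly in a small sketch. The helper names below are hypothetical, the state starts from 0 for simplicity, and the number of bits is assumed to be known to the decoder.

```python
def push_msb(x, bit, i):
    """Equation (2) style: add the bit arriving at step i above the existing bits."""
    return x + (bit << i)

def push_lsb(x, bit):
    """Equation (3) style: shift the state up and add the new bit at the bottom."""
    return (x << 1) + bit

def pop_lowest(x):
    """Read and remove the lowest bit of the state."""
    return x >> 1, x & 1

bits = [1, 1, 0]       # arrival order
x_msb = x_lsb = 0      # assumption: start from 0, message length known
for i, b in enumerate(bits):
    x_msb = push_msb(x_msb, b, i)
    x_lsb = push_lsb(x_lsb, b)

out_msb, out_lsb = [], []
for _ in bits:
    x_msb, b = pop_lowest(x_msb)
    out_msb.append(b)
    x_lsb, b = pop_lowest(x_lsb)
    out_lsb.append(b)

print(out_msb)  # first bit in comes out first (queue)
print(out_lsb)  # last bit in comes out first (stack)
```

Both states are read with the same operation (take the lowest bit, then shift), yet the MSB-style state returns the bits in arrival order and the LSB-style state returns them reversed.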

The fundamental idea behind both is that, given a symbol $x_i$, if one needs to add an incoming bit $b_{i+1}$ without any loss in information, the information content of the state should increase by no more than the information content of the incoming bit:

$$ \log_2 x_{i+1} \approx \log_2 x_i + \log_2 \frac{1}{p(b_{i+1})} $$

where $p$ is the probability distribution of the bits. This means that any encoding function must be of the form $x_{i+1} \approx x_i / p(b_{i+1})$. If the bits are equally probable, i.e. $p(0) = p(1) = 1/2$, then equation (3) is optimal in the sense that it satisfies the encoding condition $x_{i+1} \approx 2 x_i$.

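The decoding function for equation (3) can be constructed as

$$ (x_i, b_{i+1}) = \left( \left\lfloor \frac{x_{i+1}}{2} \right\rfloor,\; x_{i+1} \bmod 2 \right) \tag{6} $$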

A point to note here is that neither of the above two encodings is optimal, as they are at least 1 bit larger than the Shannon entropy. This is because we start with $x_0 = 1$, which is needed to differentiate '0' from '00' etc.
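Both observations can be checked numerically. The sketch below applies the doubling update $x \to 2x + b$ to a hypothetical bit sequence, starting from a state of 1, and records how many bits of information each step adds to the state.

```python
import math

bits = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical input
x = 1                            # the leading 1 marks where the message starts
growth = []
for b in bits:
    x_next = (x << 1) + b
    # Information added to the state by this step, in bits
    growth.append(math.log2(x_next) - math.log2(x))
    x = x_next

print(x, x.bit_length(), len(bits))
print([round(g, 3) for g in growth])
```

The final state needs one bit more than the message length, which is the cost of the leading 1, and the per-step growth settles to about one bit per equiprobable input bit.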

An easily overlooked aspect of the above encodings is that they amortize the coded result over the bits. That is, each bit is not mapped to its own number; rather, a bunch of bits are mapped into a single number. There is no one-to-one mapping; the information is distributed over the entire codeword. Therefore, it has the potential to do better than a bit-to-number mapping. This amortization is the basis for all entropy coding methods, as it points to a potential technique to get close to the Shannon entropy of the input [2].

```python
# Code for binary -> natural number coding
# Bit Tricks:
#   (a << b) <=> a * (2 ** b)
#   (a >> b) <=> a // (2 ** b)
#   (a % (2 ** b)) <=> (a & ((1 << b) - 1))

def C2(bitstream):  # Encode
    """
    Encodes a list of bits (given in reverse order) to a natural number.
    Ex: bitstream = [1, 0, 0, 1, 0, 1, 1, 0, 1][::-1] -> 873
    """
    x = 1  # Leading 1 distinguishes e.g. '0' from '00'
    for b in bitstream:
        x = (x << 1) + b
    return x

def D2(x):  # Decode
    """
    Decodes the given coding into a list of bits.
    Ex: x = 873 -> [1, 0, 0, 1, 0, 1, 1, 0, 1]
    """
    bits = []
    while x > 1:
        bits.append(x % 2)
        x = x >> 1
    return bits

# We convert binary to decimal here. Is it possible to change the base?
# Can you encode using different bases at each time step?
```
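One way to explore the two questions above is a mixed-radix variant of the same idea, where each step multiplies by its own base instead of by 2. This is a sketch under the assumption that the decoder knows the sequence of bases; the function names are hypothetical.

```python
def encode_mixed_radix(digits, bases):
    """Encode digits[i] in base bases[i]; requires 0 <= digits[i] < bases[i]."""
    x = 1  # same leading-1 convention as the binary code
    for d, base in zip(digits, bases):
        assert 0 <= d < base
        x = x * base + d
    return x

def decode_mixed_radix(x, bases):
    """Invert encode_mixed_radix by replaying the bases in reverse order."""
    digits = []
    for base in reversed(bases):
        x, d = divmod(x, base)
        digits.append(d)
    assert x == 1  # only the leading 1 should remain
    return digits[::-1]

digits = [2, 0, 6, 1]
bases = [3, 2, 10, 2]  # a different base at each time step
x = encode_mixed_radix(digits, bases)
print(x, decode_mixed_radix(x, bases))
```

Decoding works as long as each step is undone with the same base that was used to encode it, and in the opposite order.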

Asymmetric Numeral Coding

Asymmetric Numeral Systems (ANS) builds upon the coding scheme of equation (3) and generalizes it. The above two coding schemes are sometimes referred to as Positional Numeral Systems (PNS), and ANS can be viewed as a generalization of positional numeral systems. One concern in the PNS scheme is that $x_i$ must have a finite range. As the bits keep coming in, $x_i$ cannot be arbitrarily large in practice. Thus we need to address two issues -

- Enforcing $x_i$ to be within some finite interval of natural numbers.

- Within that finite interval, the distribution of even and odd codings must reflect the corresponding probabilities of the bits.

Let's address the latter issue first.

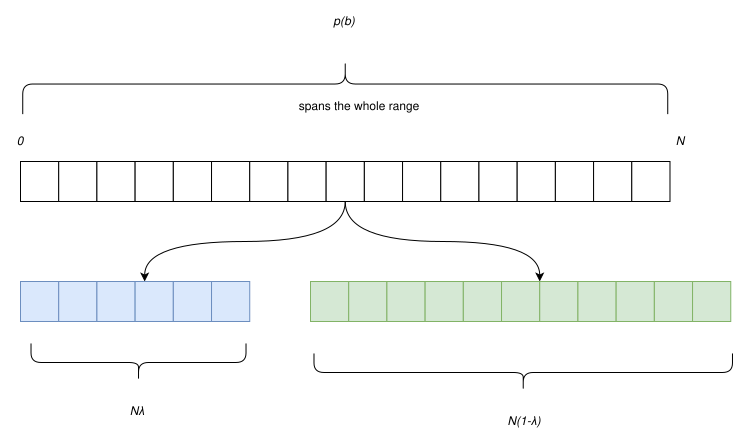

Consider equation (3) again. As discussed earlier, it is optimal only when $p(0) = p(1) = 1/2$. We can say that the bit probability splits and uniformly covers the range of $x_{i+1}$, which is the set of all natural numbers $\mathbb{N}$. In the case $p = 1/2$, the range is split into two parts - even and odd numbers, depending on the value of $b_{i+1}$. As such, $x_{i+1}$ is the $x_i^{th}$ number in the even/odd set.

Extending this intuition to unequal probabilities, $x_{i+1}$ is the $x_i^{th}$ number in the subset defined by $b_{i+1}$. For this, the range of $x_{i+1}$ must be split into two subsets whose sizes reflect the probabilities of their respective states. If the probability of 1 is $p$ and the range of $x_{i+1}$ is the set $(0, N]$, then we want $\lceil N p \rceil$ odd numbers in $(0, N]$. The operator $\lceil \cdot \rceil$ accounts for the fractional components, as we shall stick to natural numbers. We want a generalized coding method such that the following equality is satisfied.

$$ x_i = \lceil x_{i+1}\, p \rceil \quad \text{for } b_{i+1} = 1 \tag{7} $$

Say we updated $x_i$ to $x_{i+1}$ using some encoding. The number of odd values up to $x_{i+1}$ is then $\lceil x_{i+1}\, p \rceil$. However, the coding must be robust to the ceil operation. For example, suppose a candidate encoding yields $x_{i+1} = 6$, so that there are $\lceil 6p \rceil$ odd values in the range $(0, 6]$. If $\lceil 6p \rceil \neq x_i$, then 6 cannot be a correct code for $x_i$. On the other hand, a candidate whose count equals $x_i$ is a valid code, which can be verified similarly. The idea behind equation (7) is that each ceil operation (we will use more of those below) rounds the result of a computation; when applied sequentially, the rounding errors may accumulate and produce a code that is not uniquely reversible, i.e. information is lost.
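The validity check can be sketched in code. This is one reading of the condition, under the assumptions that the "odd" slots are the positions where the decoder would read a 1 and that the count is taken over the slots below the candidate. The probability $p = 3/10$ and the state 2 are hypothetical values, and exact fractions are used to avoid floating-point rounding.

```python
import math
from fractions import Fraction

def encodes_one(x_next, x, p):
    """Check whether x_next is a valid code for (state x, bit 1)."""
    ones_below = math.ceil(x_next * p)  # number of '1' slots below x_next
    is_one_slot = math.ceil((x_next + 1) * p) - ones_below == 1
    return is_one_slot and ones_below == x

p = Fraction(3, 10)  # assumed probability of bit 1
x = 2                # assumed current state
valid = [c for c in range(1, 20) if encodes_one(c, x, p)]
print(valid)  # [6]
```

Under these assumptions, exactly one candidate passes the check for each state, and it coincides with $\lfloor x / p \rfloor$.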

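We can now come up with a coding scheme that accounts for any arbitrary binary distribution $p$, satisfying equation (7). If the probability of 1 is $p$, then

$$ x_{i+1} = C(x_i, b_{i+1}) = \begin{cases} \left\lceil \dfrac{x_i + 1}{1 - p} \right\rceil - 1 & \text{if } b_{i+1} = 0 \\ \left\lfloor \dfrac{x_i}{p} \right\rfloor & \text{if } b_{i+1} = 1 \end{cases} \tag{8} $$

For $p = 1/2$, this reduces to $x_{i+1} = 2 x_i + (1 - b_{i+1})$, which is equation (3) with the roles of even and odd numbers swapped. The decoding function can be derived as

$$ b_{i+1} = \lceil (x_{i+1} + 1)\, p \rceil - \lceil x_{i+1}\, p \rceil, \qquad x_i = \begin{cases} x_{i+1} - \lceil x_{i+1}\, p \rceil & \text{if } b_{i+1} = 0 \\ \lceil x_{i+1}\, p \rceil & \text{if } b_{i+1} = 1 \end{cases} $$

Observe that decoding is essentially through equation (7). The above encoding scheme is called uniform Asymmetric Binary Systems (uABS), as we exploit the asymmetry in the probabilities of the bits to get compression, while the subsets segmented by those probabilities are themselves uniformly distributed.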

Let us take an illustrative example - consider the bitstream 1, 0, 0, 1, 0 with a fixed probability $p$ for the bit 1. This can be encoded using equation (8), one bit at a time (note that we encode in the reverse order).

Similarly, decoding applies the decoding function repeatedly until the initial state is reached.

And we get back our bits in their original order.
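The example can be traced step by step in code. The value $p = 3/10$ below is an assumption chosen for illustration and is not necessarily the value used in the example above; exact fractions keep the floor and ceil operations free of floating-point error. The helper names are hypothetical.

```python
import math
from fractions import Fraction

def encode_bit(x, b, p):
    """One uABS encoding step for bit b, where p is the probability of bit 1."""
    if b:
        return math.floor(x / p)
    return math.ceil((x + 1) / (1 - p)) - 1

def decode_bit(x, p):
    """One uABS decoding step: returns (previous state, decoded bit)."""
    b = math.ceil((x + 1) * p) - math.ceil(x * p)
    x_prev = math.ceil(x * p) if b else x - math.ceil(x * p)
    return x_prev, b

p = Fraction(3, 10)  # assumed probability of bit 1
bits = [1, 0, 0, 1, 0]

x = 1
states = [x]
for b in reversed(bits):  # encode in reverse order
    x = encode_bit(x, b, p)
    states.append(x)
print(states)  # [1, 2, 6, 9, 14, 46]

decoded = []
while x > 1:
    x, b = decode_bit(x, p)
    decoded.append(b)
print(decoded)  # [1, 0, 0, 1, 0]
```

Each encoded 1 roughly multiplies the state by $1/p$ and each encoded 0 by $1/(1-p)$, so the less probable bit costs more of the state's range.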

```python
# Code for generalized binary -> natural number coding
import math

def C2(bitstream, p: float = 0.5):  # Encode
    """
    Encodes a list of bits (given in reverse order) to a
    natural number, where p is the probability of bit 1.
    """
    x = 1
    for b in bitstream:
        if b:
            x = math.floor(x / p)
        else:
            x = math.ceil((x + 1) / (1 - p)) - 1
    return x

def D2(x, p: float = 0.5):  # Decode
    """
    Decodes the given coding into a list of bits, where p is
    the probability of bit 1.
    """
    bits = []
    while x > 1:
        # The slot x holds a 1 exactly when the count of 1-slots increases at x
        b = math.ceil((x + 1) * p) - math.ceil(x * p)
        bits.append(b)
        if b:
            x = math.ceil(x * p)
        else:
            x = x - math.ceil(x * p)
    return bits

# What happens if you give a bitstream of length 60 or more?
# Does it decode correctly? Why?
```
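A hint for the questions above: a Python float carries a 53-bit significand, so once the state grows beyond $2^{53}$, expressions such as `x / p` and `x * p` can no longer represent it exactly. The sketch below sidesteps this with exact rational arithmetic. It is an illustrative variant rather than the code above: the function names are hypothetical, the encoder reverses the bits internally, and the decoder is told how many bits to pop.

```python
import math
import random
from fractions import Fraction

def encode_exact(bits, p):
    """uABS encoding with exact rationals; bits are pushed in reverse order."""
    x = 1
    for b in reversed(bits):
        x = math.floor(x / p) if b else math.ceil((x + 1) / (1 - p)) - 1
    return x

def decode_exact(x, p, n):
    """Pop exactly n bits from the state x."""
    bits = []
    for _ in range(n):
        ones_below = math.ceil(x * p)
        b = math.ceil((x + 1) * p) - ones_below
        x = ones_below if b else x - ones_below
        bits.append(b)
    return bits

# A float cannot tell these two integers apart
print(float(2**53 + 1) == float(2**53))  # True

random.seed(0)
p = Fraction(3, 10)  # assumed probability of bit 1
bits = [int(random.random() < 0.3) for _ in range(200)]
x = encode_exact(bits, p)
decoded = decode_exact(x, p, len(bits))
print(x.bit_length(), decoded == bits)
```

Exact arithmetic removes the rounding problem but not the growth of the state, which still needs more bits with every symbol pushed.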

Extending to Arbitrary Alphabets

Now it is important to see the bits in the above discussion as a set containing just two symbols $\{0, 1\}$. This set is called the alphabet. The above discussion pertaining to binary alphabets can be extended to any alphabet $\mathcal{A} = \{a_1, ..., a_K\}$ of $K$ elements with an associated probability distribution $p$ over all its symbols. Based on the previous discussion, given the range for the encoding as $[0, M)$ (where $M$ is a positive integer, typically a power of 2), which is a part of the natural number range $\mathbb{N}$, we ideally have to split the range into disjoint regions, each with size proportional to the respective symbol probability. In other words, we want to split the range into subsets of sizes $\{f_1, ..., f_K\}$ where

$$ \begin{aligned} f_k &= p(s = a_k) M \quad k = 1, ...,K \\ \sum_{k=1}^K f_k &= M \end{aligned} $$

These range sizes $f_k$ are called _scaled probabilities_. To construct a coding scheme, we must ensure that $x_{i+1}$ is the $x_i^{th}$ value in the $k^{th}$ subset, given the symbol $a_k$. This is a direct extension of the idea discussed earlier for even - odd subsets.
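In practice $p(a_k) M$ is rarely a whole number, so the scaled probabilities have to be rounded while still summing to $M$. The sketch below shows one simple heuristic for that; the function name and the correction rule (adjusting the largest entry) are assumptions for illustration, not a method taken from the text.

```python
def scale_probabilities(probs, precision):
    """Quantize probabilities to integer sizes f_k that sum to M = 2**precision."""
    M = 1 << precision
    # Round each entry, but keep every symbol encodable (f_k >= 1)
    freqs = [max(1, round(p * M)) for p in probs]
    # Absorb the rounding drift in the largest entry (a simple heuristic)
    largest = freqs.index(max(freqs))
    freqs[largest] += M - sum(freqs)
    assert freqs[largest] >= 1 and sum(freqs) == M
    return freqs

print(scale_probabilities([0.5, 0.3, 0.2], precision=4))
print(scale_probabilities([1 / 3, 1 / 3, 1 / 3], precision=4))
```

A larger precision makes the rounded sizes track the true probabilities more closely, at the cost of a larger range $M$.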

Recall equation (3). It can be interpreted as follows: $b_{i+1}$ essentially chooses the odd or even subset, and $2 x_i$ adds the necessary offset within that subset. Therefore, our required generalized mechanism can be written in the form

$$ x_{i+1} = C_{ANS}(x_i, s_{i+1}) = \text{Offset } + \text{ Subset selection} $$

where $s_{i+1}$ is the input symbol that can take values in $\{a_1, ..., a_K\}$. One core insight here is that the values in the subsets of size $f_k$ are uniformly distributed. Each value within those subsets is equally likely. This makes our offset computation much simpler.

Let $x_i$ be the current state and the incoming symbol be $s_{i+1} = a_k$. The offset can be calculated as

$$ \text{Offset} = M \left\lfloor \frac{x_i}{f_k} \right\rfloor + (x_i \bmod f_k) $$

The precise location within the subset is calculated by $x_i \bmod f_k$. This is because the subset has a fixed size $f_k$, and using the modulus helps us recycle the values within the subset. This step is essential to avoid a massive value for $x_{i+1}$. However, this recycling destroys the uniqueness of the encoding. We must therefore repeat the range of size $M$ over the natural number range (refer to the above figure). We can then uniquely identify the range of size $M$ within the natural number range by $\lfloor x_i / f_k \rfloor$.

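The subset selection part is pretty straightforward. Each symbol in the alphabet that occurs before $a_k$ occupies $f_1, ..., f_{k-1}$ points on $[0, M)$ respectively. If we wish to select the $k^{th}$ subset, then its starting point is simply

$$ CDF(a_k) = \sum_{j=1}^{k-1} f_j \tag{13} $$

This is essentially the CDF of the symbol $a_k$, as the $f_k$ are now viewed as scaled probabilities. Putting everything together, we get the final ANS coding scheme.

$$ x_{i+1} = C_{ANS}(x_i, a_k) = M \left\lfloor \frac{x_i}{f_k} \right\rfloor + CDF(a_k) + (x_i \bmod f_k) $$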
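The coding scheme can be written as a pair of small functions. The sketch below follows the coding formula $M \lfloor x / f_k \rfloor + CDF(a_k) + (x \bmod f_k)$; the function names and the three-symbol table (sizes 8, 4, 4 with $M = 16$) are hypothetical values chosen for illustration, and symbols are referred to by their index $k$.

```python
from itertools import accumulate

def build_cdf(freqs):
    """Starting point of each subset: CDF(a_k) = f_1 + ... + f_{k-1}."""
    return [0] + list(accumulate(freqs))[:-1]

def rans_encode_step(x, k, freqs, cdf, M):
    """One encoding step for the symbol with index k."""
    block = x // freqs[k]   # which repetition of the range of size M
    offset = x % freqs[k]   # position inside the subset of symbol k
    return M * block + cdf[k] + offset

freqs = [8, 4, 4]           # hypothetical scaled probabilities
M = sum(freqs)              # 16
cdf = build_cdf(freqs)      # [0, 8, 12]

x = 1
trace = [x]
for k in [1, 2]:            # push the second symbol, then the third
    x = rans_encode_step(x, k, freqs, cdf, M)
    trace.append(x)
print(cdf, trace)  # [0, 8, 12] [1, 9, 45]
```

Pushing a symbol with a small $f_k$ multiplies the state by a larger factor $M / f_k$, which is how rarer symbols end up costing more bits.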

Another way to think about ANS:

A popular intuition for ANS is to think in terms of range encoding. This is built upon the fact that equation (3) can use any base and not necessarily 2. Additionally, it can also change its base at each time step and still encode all the information faithfully. The decoding can be done correctly if done on the same base as encoding for that step.

The state $x_i$ is itself an encoding, and the incoming symbol $a_k$ is represented by its scaled probability $f_k$. Since the state is already an encoding, we decode it with the base $f_k$. Recall that each subset is uniformly distributed and therefore, we can simply decode akin to equation (6).

$$ \left( \left\lfloor \frac{x_i}{f_k} \right\rfloor,\; x_i \bmod f_k \right) $$

where $x_i \bmod f_k$ represents the information associated with $x_i$ within the subset. For each symbol, we don't keep track of the symbol itself, rather its scaled probability (base). To get the positional information of the subset, we similarly follow equation (13).

The second and final step is to encode this information within the finite range $[0, M)$, again assuming that it is uniformly distributed.

$$ x_{i+1} = M \left\lfloor \frac{x_i}{f_k} \right\rfloor + CDF(a_k) + (x_i \bmod f_k) $$

Since we are encoding the subset range within the given finite interval, this technique is called range ANS or simply rANS.

Decoding can be done simply by inverting each of the encoding components.

$$ x_i = f_k \left\lfloor \frac{x_{i+1}}{M} \right\rfloor + (x_{i+1} \bmod M) - CDF(a_k), \quad \text{where } a_k \text{ satisfies } CDF(a_k) \leq (x_{i+1} \bmod M) < CDF(a_{k+1}) $$

The latter part of the above equation can be computed easily by pre-computing the inverse cumulative distribution function. This can understandably be non-trivial to compute in some cases. In such cases, there are more sophisticated techniques available. In this discussion, we shall stick to a simple iterative method to find the symbol that satisfies the above constraint.
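As an alternative to a linear scan, the symbol lookup can be done with a binary search over the sorted table of subset starting points. The sketch below uses `bisect_right` from the Python standard library; whether this matches the more sophisticated techniques alluded to above is an assumption. The table (sizes 8, 4, 4 with $M = 16$) and the function name are hypothetical.

```python
from bisect import bisect_right

def rans_decode_step(x, freqs, cdf, M):
    """One decoding step: returns (previous state, index k of the symbol)."""
    slot = x % M
    # Largest k with cdf[k] <= slot, i.e. cdf[k] <= slot < cdf[k + 1]
    k = bisect_right(cdf, slot) - 1
    x_prev = freqs[k] * (x // M) + slot - cdf[k]
    return x_prev, k

freqs = [8, 4, 4]    # hypothetical scaled probabilities
cdf = [0, 8, 12]     # starting point of each subset
M = 16

x = 45               # a state produced by pushing index 1, then index 2, from x = 1
symbols = []
for _ in range(2):
    x, k = rans_decode_step(x, freqs, cdf, M)
    symbols.append(k)
print(symbols, x)  # [2, 1] 1
```

The symbols come back in the reverse of the order in which they were pushed, consistent with the stack behaviour of ANS.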

Implementation

```python
from typing import List

import numpy as np


class rANS:
    """
    Bit Tricks:
        (a << b) <=> a * (2 ** b)
        (a >> b) <=> a // (2 ** b)
        (a % (2 ** b)) <=> (a & ((1 << b) - 1))
    """

    def __init__(self,
                 precision: int,
                 alphabet: List[str],
                 probs: List[float],
                 verbose: bool = False) -> None:
        """
        Implements the rANS as a stack.
        Encoding and Decoding are named as 'push'
        and 'pop' operations to be consistent with
        stack terms.

        Usage:
            rans = rANS(precision, Alphabet, probs)
            rans.push(symbol)
            decoded_symbol = rans.pop()
        """
        assert precision > 0
        self.precision = precision
        self.verbose = verbose
        self.Alphabet = alphabet

        # Scale the probabilities to the range M = 2 ** precision.
        # Note: probs * M is assumed to be (close to) whole numbers.
        probs = np.asarray(probs, dtype=np.float64)
        scaled_probs = probs * (1 << precision)
        cum_probs = np.insert(np.cumsum(scaled_probs), 0, 0)

        # Make sure everything is an int
        scaled_probs = scaled_probs.astype(np.int64)
        cum_probs = cum_probs.astype(np.int64)

        # Python 3.7+ dicts are ordered. Values are stored as Python ints
        # so that the state can grow beyond 64 bits.
        self.probs = dict(zip(alphabet, probs.tolist()))
        self.fk = dict(zip(alphabet, scaled_probs.tolist()))
        self.cdf = dict(zip(alphabet, cum_probs.tolist()))

        self.x = 1  # State

    def push(self, symbol: str) -> None:  # Encode
        """
        Coding formula:
            M floor(x / Fk) + CDF(symbol) + (x mod Fk)

        :param symbol: Symbol
        """
        self.x = (((self.x // self.fk[symbol]) << self.precision)
                  + self.x % self.fk[symbol]
                  + self.cdf[symbol])

    def pop(self) -> str:  # Decode
        """
        Decoding formula:
            symbol such that CDF(symbol) <= (x mod M) < CDF(symbol + 1)
            x = floor(x / M) fk - CDF(symbol) + (x mod M)

        :return: Decoded symbol
        """
        cdf = self.x & ((1 << self.precision) - 1)  # x mod M
        symbol = self._icdf(cdf)
        self.x = ((self.x >> self.precision) * self.fk[symbol]
                  - self.cdf[symbol]
                  + cdf)
        return symbol

    def _icdf(self, value: int) -> str:
        # Linear scan for the symbol with CDF(symbol) <= value < CDF(next symbol)
        items = list(self.cdf.items())
        for (symbol, _), (_, next_cdf) in zip(items, items[1:]):
            if value < next_cdf:
                return symbol
        return self.Alphabet[-1]  # Account for the last subset
```

Conclusion

We have discussed ANS from the fundamentals of information theory, hopefully with some good intuition. We have, however, ignored one crucial part which makes ANS practical. It is easy to observe that as the stream of symbols becomes longer, the range required to encode them increases exponentially in $n$, the number of symbols in the stream. This can quickly become infeasible. It can be resolved through a process called renormalization, where the state $x_i$ is normalized to be within some fixed range at some appropriate precision [3]. In a future post, we shall revisit ANS from a practical perspective.
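The growth of the state is easy to observe. The sketch below pushes randomly drawn symbols through the coding formula $M \lfloor x / f_k \rfloor + CDF(a_k) + (x \bmod f_k)$ and records the bit length of the state; the table (sizes 8, 4, 4 with $M = 16$), the seed, and the checkpoints are hypothetical choices for illustration.

```python
import random

freqs = [8, 4, 4]   # hypothetical scaled probabilities
cdf = [0, 8, 12]    # starting point of each subset
M = 16

random.seed(0)
x = 1
bit_lengths = {}
for n in range(1, 1001):
    # Draw a symbol index with probability f_k / M
    k = random.choices(range(3), weights=freqs)[0]
    x = M * (x // freqs[k]) + cdf[k] + (x % freqs[k])
    if n in (10, 100, 1000):
        bit_lengths[n] = x.bit_length()
print(bit_lengths)
```

The number of bits in the state grows roughly linearly with the number of symbols, which means the state itself grows exponentially; this is the growth that renormalization keeps in check.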

References & Further Reading

For a detailed discussion of all the methods described above, refer to James Townsend's PhD thesis. Additionally, his tutorial provides some implementation details of ANS along with Python code.

Another accessible article on ANS was written by Brian Keng.

For an authoritative source, Jarek Duda, the inventor of ANS, has published a very nice overview of ANS.

A detailed tutorial on ANS is available on YouTube by Robert Bamler of the University of Tübingen as a part of his course on "Data compression with deep probabilistic models".

| [1] | One drawback of Huffman coding is that it often fails to achieve the optimal codeword length, as Huffman codewords can only have integer lengths. For Huffman coding, the expected codeword length $L$ is bounded as $H(X) \leq L < H(X) + 1$. Therefore, for each sample, there can be up to 1 extra bit in the corresponding Huffman codeword. For $N$ samples, the compressed output can have up to $N$ extra bits, which is highly inefficient. |

| [2] | A better example to understand amortization is to encode a list of decimal numbers, say [6, 4, 5], as 645 (the same as coding in base 10) and check how the binary representation changes with changes in the values. [7, 4, 5], a change in one value, results in a very different encoding (745) than for [6, 4, 5]. We can understand this as the information in each input symbol being distributed or amortized over the entire codeword. |

| [3] | Renormalization is required for almost all streaming entropy coding methods like Arithmetic Coding for the same reason - to normalize the codes to be within some finite representable (on the floating-point number line) range. |